
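1 Overview

This article demonstrates how to use the NGINX Plus dynamic configuration API to add and remove load-balanced servers that are registered with Apache ZooKeeper.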

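As shown in the figure above, the demo environment consists of:

1. NGINX Plus: load balances traffic to the demo app.

2. ZooKeeper: service registration and discovery.

3. Demo app: a sample application that can be scaled horizontally.

4. Registrator

a) Automatically detects service changes and registers them with ZooKeeper.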

The NGINX Plus container listens on port 80, and the built-in NGINX Plus Dashboard listens on port 8080. The ZooKeeper container listens on ports 2181, 2888, and 3888.

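Registrator monitors Docker for new containers that are started with published ports and registers the corresponding services with ZooKeeper. Environment variables set on a container give explicit control over how its service is registered. For each hello-world container of the demo app, the SERVICE_TAGS environment variable is set to production, which marks the container as an upstream server for NGINX Plus to load balance. When a demo app container exits or is removed, Registrator automatically removes its znode entry from ZooKeeper.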

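Finally, zk-tool, a tool written in Ruby and included in the demo, uses ZooKeeper watches to trigger an external handler (script.sh) every time the list of registered service containers changes. This bash script fetches the list of all current NGINX Plus upstream servers, uses zk-tool to loop through all containers registered with ZooKeeper that are tagged production, and uses the dynamic configuration API to add any that are not yet listed to the NGINX Plus upstream group. It then removes from the upstream group any server that is no longer registered in ZooKeeper as a production-tagged container.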
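
The add-then-remove logic described above boils down to two set differences. The following is a minimal Python sketch of that idea, not the demo's actual script.sh. The function name `plan_changes` is hypothetical, and the upstream entries are assumed to be dictionaries with at least the `id` and `server` fields, as in the JSON that appears in the watch output.

```python
from typing import Dict, Iterable, List, Tuple


def plan_changes(
    upstream_servers: List[Dict],
    zk_servers: Iterable[str],
) -> Tuple[List[str], List[int]]:
    """Return (addresses to add, upstream ids to remove).

    upstream_servers: entries as listed for the NGINX Plus upstream group.
    zk_servers: "ip:port" strings registered in ZooKeeper and tagged production.
    """
    registered = set(zk_servers)
    current = {entry["server"] for entry in upstream_servers}

    # Registered in ZooKeeper but missing from the upstream group: add.
    to_add = sorted(registered - current)

    # Present in the upstream group but no longer registered: remove by id.
    to_remove = [
        entry["id"]
        for entry in upstream_servers
        if entry["server"] not in registered
    ]
    return to_add, to_remove


if __name__ == "__main__":
    upstreams = [
        {"id": 10, "server": "10.1.10.32:32769"},
        {"id": 13, "server": "10.1.10.32:32774"},
    ]
    registered = ["10.1.10.32:32769", "10.1.10.32:32768"]
    print(plan_changes(upstreams, registered))
    # (['10.1.10.32:32768'], [13])
```

Removal is keyed on the upstream `id` rather than the address because the sample output reports removed servers by their id.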

2 Environment Setup

2.1 Clone the demo repo

Clone the NGINX demo project:

$ git clone https://github.com/nginxinc/NGINX-Demos.git

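2.2 Prepare the NGINX Plus certificate

Copy the NGINX Plus certificate files nginx-repo.key and nginx-repo.crt to the ~/NGINX-Demos/zookeeper-demo/nginxplus/ directory.

2.3 Deploy the demo containers

From the ~/NGINX-Demos/zookeeper-demo directory, start the ZooKeeper, Registrator, and NGINX Plus containers: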

$ docker-compose up -d

Once the containers are up, confirm their status and then start the demo app service:

[root@centos32 zookeeper-demo]# docker-compose -f create-http-service.yml up -d

WARNING: Found orphan containers (registrator, nginxplus, zookeeper) for this project. If you removed or renamed this service in your compose file, you can run this command with the --remove-orphans flag to clean it up.

Pulling http (nginxdemos/hello:latest)...

latest: Pulling from nginxdemos/hello

550fe1bea624: Pull complete

d421ba34525b: Pull complete

fdcbcb327323: Pull complete

bfbcec2fc4d5: Pull complete

0497d4d5654f: Pull complete

f9518aaa159c: Pull complete

a70e975849d8: Pull complete

Digest: sha256:f5a0b2a5fe9af497c4a7c186ef6412bb91ff19d39d6ac24a4997eaed2b0bb334

Status: Downloaded newer image for nginxdemos/hello:latest

Creating zookeeper-demo_http_1 ... done

3 Verification

3.1 ZooKeeper service

Configure the ZooKeeper service:

[root@centos32 zookeeper]# docker exec -ti zookeeper ./zk-tool create /services -d abc

Created /services as ephemeral=false with data: abc

Watch the ZooKeeper service and the NGINX Plus upstream configuration:

[root@centos32 zookeeper]# docker exec -ti zookeeper ./zk-tool watch-children /services/http

2021-03-28 03:23:12 +0000

============================

NGINX upstreams in backend:

[]

Servers registered with ZK:

10.1.10.32:32768扩展demo app的容器数量:

$ docker-compose -f create-http-service.yml up -d --scale http=5

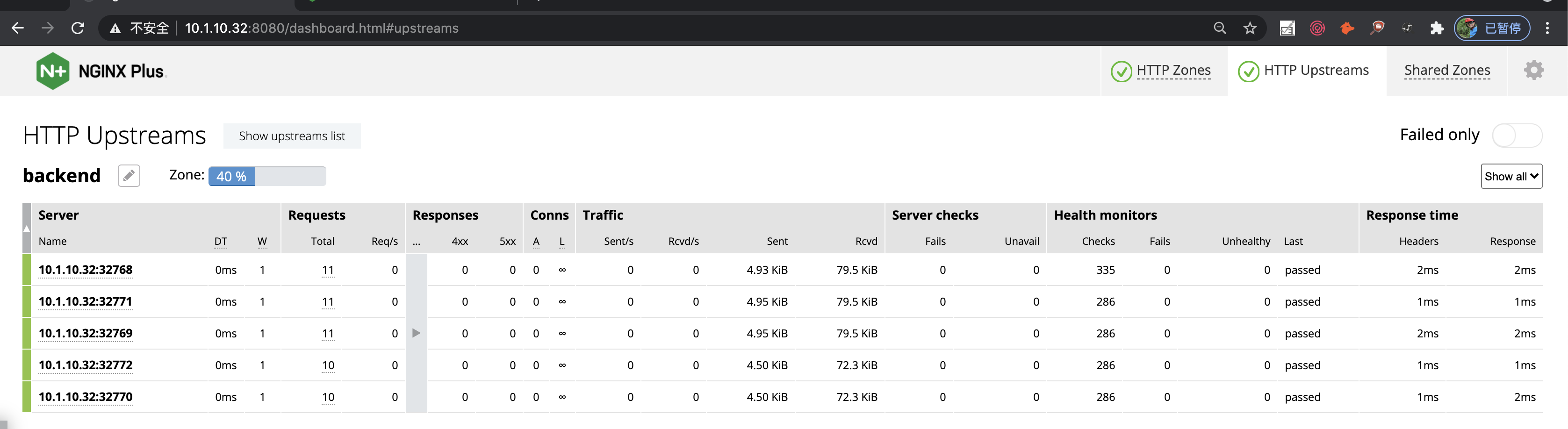

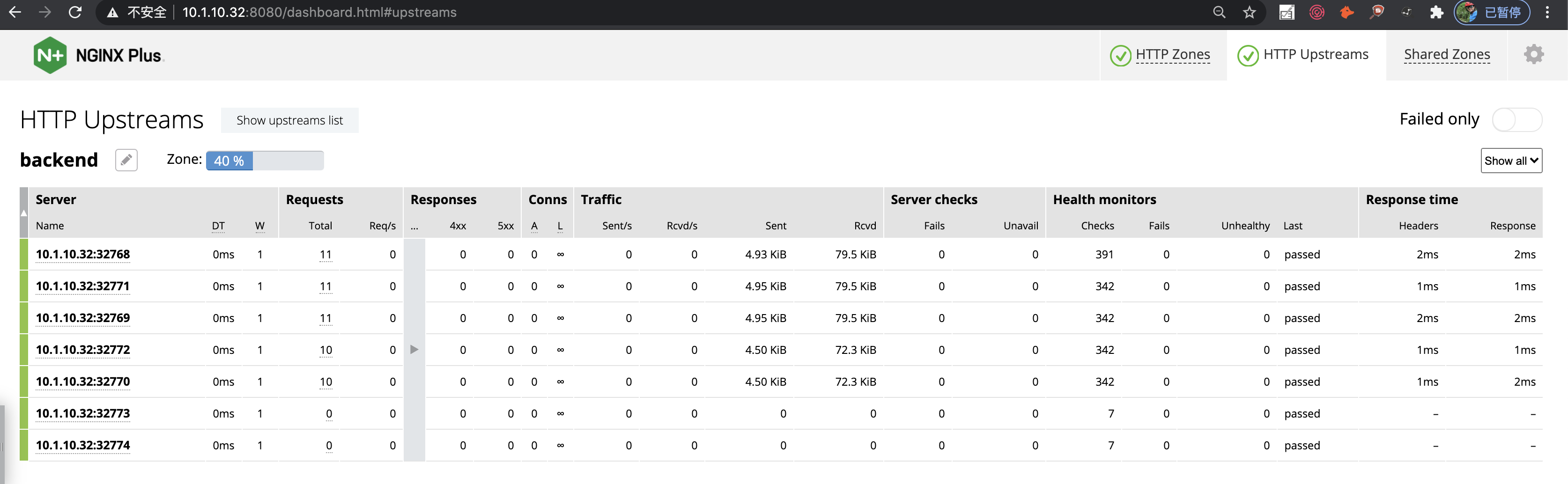

$ docker-compose -f create-http-service.yml up -d --scale http=73.2 NGINX Plus upstream

Scale the number of demo app containers and watch how the NGINX upstream configuration changes.

[root@centos32 zookeeper-demo]# docker-compose -f create-http-service.yml up -d --scale http=5

WARNING: Found orphan containers (registrator, zookeeper, nginxplus) for this project. If you removed or renamed this service in your compose file, you can run this command with the --remove-orphans flag to clean it up.

Creating zookeeper-demo_http_2 ... done

Creating zookeeper-demo_http_3 ... done

Creating zookeeper-demo_http_4 ... done

Creating zookeeper-demo_http_5 ... done

[root@centos32 zookeeper-demo]# docker-compose -f create-http-service.yml up -d --scale http=7

WARNING: Found orphan containers (registrator, zookeeper, nginxplus) for this project. If you removed or renamed this service in your compose file, you can run this command with the --remove-orphans flag to clean it up.

Creating zookeeper-demo_http_6 ... done

Creating zookeeper-demo_http_7 ... done

[root@centos32 zookeeper-demo]# docker-compose -f create-http-service.yml up -d --scale http=3

WARNING: Found orphan containers (zookeeper, registrator, nginxplus) for this project. If you removed or renamed this service in your compose file, you can run this command with the --remove-orphans flag to clean it up.

Stopping and removing zookeeper-demo_http_4 ... done

Stopping and removing zookeeper-demo_http_5 ... done

Stopping and removing zookeeper-demo_http_6 ... done

Stopping and removing zookeeper-demo_http_7 ... done
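
After each scaling step, the upstream group can also be read back or changed through the NGINX Plus REST API. The sketch below only shows how such requests could be built in Python. It rests on assumptions that are not confirmed by the demo: that the API is exposed under /api on the dashboard port 8080, and that API version 6 is available. The upstream group name backend comes from the watch output. The demo's own script.sh may issue these calls differently.

```python
import json
import urllib.request

# Assumptions for illustration: the API is served under /api on the
# dashboard port (8080) and version 6 is available. Adjust to your setup.
API_BASE = "http://localhost:8080/api/6"
UPSTREAM = "backend"  # upstream group name seen in the watch output


def servers_url(base: str = API_BASE, upstream: str = UPSTREAM) -> str:
    """URL of the server list for one HTTP upstream group."""
    return f"{base}/http/upstreams/{upstream}/servers"


def add_request(server: str) -> urllib.request.Request:
    """Build a POST request that adds `server` ("ip:port") to the group."""
    body = json.dumps({"server": server}).encode("utf-8")
    return urllib.request.Request(
        servers_url(),
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def remove_request(server_id: int) -> urllib.request.Request:
    """Build a DELETE request that removes the server with this id."""
    return urllib.request.Request(f"{servers_url()}/{server_id}", method="DELETE")


def list_servers() -> list:
    """Fetch the current server list (requires a reachable NGINX Plus)."""
    with urllib.request.urlopen(servers_url()) as resp:
        return json.load(resp)
```

Passing a request object to `urllib.request.urlopen` sends it. Building the requests separately keeps the URL and payload easy to inspect before anything touches a live instance.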

3.3 Sample scaling output

[root@centos32 ~]# docker exec -ti zookeeper ./zk-tool watch-children /services/http

2021-03-28 06:29:12 +0000 ------- 3 containers deployed

============================

NGINX upstreams in backend:

[]

Servers registered with ZK:

10.1.10.32:32769

{"id":10,"server":"10.1.10.32:32769","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false}Added 10.1.10.32:32769 to the nginx upstream group backend!

10.1.10.32:32768

{"id":11,"server":"10.1.10.32:32768","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false}Added 10.1.10.32:32768 to the nginx upstream group backend!

10.1.10.32:32770

{"id":12,"server":"10.1.10.32:32770","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false}Added 10.1.10.32:32770 to the nginx upstream group backend!

"10.1.10.32:32769" matches zk entry 10.1.10.32:32769

"10.1.10.32:32768" matches zk entry 10.1.10.32:32768

"10.1.10.32:32770" matches zk entry 10.1.10.32:32770

============================

2021-03-28 06:30:19 +0000 ------- scaled out to 5 containers

============================

NGINX upstreams in backend:

[{"id":10,"server":"10.1.10.32:32769","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":11,"server":"10.1.10.32:32768","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":12,"server":"10.1.10.32:32770","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false}]

Servers registered with ZK:

10.1.10.32:32774

{"id":13,"server":"10.1.10.32:32774","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false}Added 10.1.10.32:32774 to the nginx upstream group backend!

10.1.10.32:32773

{"id":14,"server":"10.1.10.32:32773","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false}Added 10.1.10.32:32773 to the nginx upstream group backend!

10.1.10.32:32769

10.1.10.32:32768

10.1.10.32:32770

"10.1.10.32:32769" matches zk entry 10.1.10.32:32769

"10.1.10.32:32768" matches zk entry 10.1.10.32:32768

"10.1.10.32:32770" matches zk entry 10.1.10.32:32770

"10.1.10.32:32774" matches zk entry 10.1.10.32:32774

"10.1.10.32:32773" matches zk entry 10.1.10.32:32773

============================

2021-03-28 06:30:37 +0000 ------- scaled in to 3 containers

============================

NGINX upstreams in backend:

[{"id":10,"server":"10.1.10.32:32769","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":11,"server":"10.1.10.32:32768","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":12,"server":"10.1.10.32:32770","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":13,"server":"10.1.10.32:32774","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":14,"server":"10.1.10.32:32773","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false}]

Servers registered with ZK:

10.1.10.32:32769

10.1.10.32:32768

10.1.10.32:32770

"10.1.10.32:32769" matches zk entry 10.1.10.32:32769

"10.1.10.32:32768" matches zk entry 10.1.10.32:32768

"10.1.10.32:32770" matches zk entry 10.1.10.32:32770

[{"id":10,"server":"10.1.10.32:32769","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":11,"server":"10.1.10.32:32768","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":12,"server":"10.1.10.32:32770","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":14,"server":"10.1.10.32:32773","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false}]Removed "10.1.10.32:32774" # 13 from NGINX upstream block backend!

[{"id":10,"server":"10.1.10.32:32769","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":11,"server":"10.1.10.32:32768","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":12,"server":"10.1.10.32:32770","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false}]Removed "10.1.10.32:32773" # 14 from NGINX upstream block backend!

============================

2021-03-28 06:30:39 +0000

============================

NGINX upstreams in backend:

[{"id":10,"server":"10.1.10.32:32769","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":11,"server":"10.1.10.32:32768","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false},{"id":12,"server":"10.1.10.32:32770","weight":1,"max_conns":0,"max_fails":1,"fail_timeout":"10s","slow_start":"0s","route":"","backup":false,"down":false}]

Servers registered with ZK:

10.1.10.32:32769

10.1.10.32:32768

10.1.10.32:32770

"10.1.10.32:32769" matches zk entry 10.1.10.32:32769

"10.1.10.32:32768" matches zk entry 10.1.10.32:32768

"10.1.10.32:32770" matches zk entry 10.1.10.32:32770

============================
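
The watch output above is verbose, so it can help to extract just the add and remove events. The following Python sketch does that with two regular expressions matched against the message wording shown in the sample output. The function name `summarize` is hypothetical, and the patterns assume the messages keep exactly this wording.

```python
import re
from typing import List, Tuple

# Patterns follow the message wording in the sample watch output.
ADDED = re.compile(r"Added (\S+) to the nginx upstream group (\w+)!")
REMOVED = re.compile(r'Removed "([^"]+)" # (\d+) from NGINX upstream block (\w+)!')


def summarize(log_text: str) -> Tuple[List[str], List[Tuple[str, int]]]:
    """Return (added addresses, removed (address, id) pairs) in log order."""
    added = [m.group(1) for m in ADDED.finditer(log_text)]
    removed = [(m.group(1), int(m.group(2))) for m in REMOVED.finditer(log_text)]
    return added, removed


if __name__ == "__main__":
    sample = (
        '{"id":13,"server":"10.1.10.32:32774"}'
        "Added 10.1.10.32:32774 to the nginx upstream group backend!\n"
        '[]Removed "10.1.10.32:32774" # 13 from NGINX upstream block backend!\n'
    )
    print(summarize(sample))
    # (['10.1.10.32:32774'], [('10.1.10.32:32774', 13)])
```

The patterns search anywhere in a line because the script prints each message directly after the JSON response, without a line break in between.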
